It’s always rewarding when someone reaches out to learn from your experiences. Vikram, a UW student, invited me to speak about my journey, and I couldn’t say no.

My journey started at IIT Guwahati, where I built a strong foundation in concepts and technology. Over two decades in the industry, I gained valuable experience in software engineering, leadership and the nature of Life. My love for Space, Physics, and Math, combined with my background in software engineering and the rapid advancements in AI over the last two years, inspired me to found Godel Space. Fascinated by Gödel’s work on incompleteness, I’ve learned that true understanding often lies beyond what can be proven, a principle that shapes how I approach problem-solving today.

I wanted to motivate the students to pursue entrepreneurship: this is one of the most opportune times to build something meaningful.

I also wanted to pass along the wisdom my mentor shared with me:

“The objective in life is not to obtain the highest power, money, fame or pleasure; instead it is to increase your understanding of life (physical, intellectual, emotional, social worlds) and to contribute to the application of that knowledge for the development of human civilization that cares for all living beings and Earth.”

https://lnkd.in/g6zJTQHi

https://lnkd.in/g3rDnCsG

#SpaceTech #Entrepreneurship #NextGen

Category: Science and Tech

Axiomatic Thinking and AI: Lessons from OpenAI’s Recent Turbulence

This Thanksgiving, I find myself reflecting with gratitude on the education I have received. It’s this education that has provided me with a unique lens through which to view and comprehend the world, equipping me with timeless knowledge that enables me to connect the dots. In this spirit of inquiry, I aim to discuss various aspects of the recent developments at OpenAI.

Unfolding Drama

- Discussion at APEC CEO Summit: Sam Altman, alongside rivals from Google and Meta Platforms, participated in a panel discussion about the future of AI at the APEC CEO Summit in San Francisco.

- Internal Concerns at OpenAI: Tensions were growing within OpenAI due to Altman’s approach to safety, commercialization, and his investments in other AI companies.

- Sudden Board Meeting and Firing: Shortly after the APEC panel, Ilya Sutskever invited Altman to a surprise video meeting, where the board informed him that he was fired. At the time, the board consisted of {Helen, Adam, Tasha, Ilya, Sam, Greg}.

- Public Announcement and Leadership Changes: OpenAI released a statement announcing Altman’s removal, citing issues with his candidness. Mira Murati was appointed as interim CEO.

- Reaction from Microsoft and Investors: Microsoft, a major investor in OpenAI, and other leaders in the tech industry were caught off guard by the news.

- Employee and Investor Backlash: OpenAI employees and external supporters expressed shock and disapproval of the board’s decision, leading to resignations and public statements of support for Altman.

- Attempts to Stabilize the Company: OpenAI’s senior leadership attempted to reassure employees and stakeholders of the company’s stability.

- Microsoft’s Offer to Altman and Brockman: On Monday, amid the ongoing turmoil at OpenAI and negotiations for Sam Altman’s return, Microsoft CEO Satya Nadella announced that Altman and Greg Brockman would join Microsoft to lead a new advanced AI research team. This announcement indicated that regardless of whether Altman and Brockman returned to their roles at OpenAI, they had the option to take on significant positions at Microsoft, reflecting the tech giant’s confidence in their leadership and expertise in AI.

- Negotiations for Altman’s Return: Amidst widespread support for Altman, discussions began about the possibility of his return. Major investors and Microsoft played key roles in these negotiations.

- Board Reorganization: Talks focused on reorganizing the board as a condition for Altman’s return.

- Employee Ultimatum: Over 700 OpenAI employees signed an open letter threatening to resign unless the board resigned and reinstated Altman.

- Altman’s Reinstatement: Following intense negotiations and internal pressure, an agreement was reached for Altman to return as CEO of OpenAI. The board was restructured, with Bret Taylor as the new chair.

- Celebration and Return to Normalcy: Upon Altman’s reinstatement, employees gathered to celebrate, signaling a return to stability and normal operations at OpenAI, but with a different set of leaders on the board: {Bret, Larry, Adam}. Sam did not rejoin the board upon his return.

OpenAI Entity Structure

While the aforementioned observations detail the events of the past week, they are underpinned by a foundational structure that governs the actions of the various stakeholders involved. Presented here is an analysis of OpenAI’s organizational framework, which, while unconventional, operates entirely within legal parameters.

- Board of Directors: This group is responsible for making the high-level decisions that guide the nonprofit arm of OpenAI. They have the authority to hire and fire executives, like the CEO, and set the organization’s strategic direction.

- OpenAI, Inc. (OpenAI Nonprofit): This is the nonprofit entity that operates under section 501(c)(3) of the U.S. tax code. Its goal is to promote and develop friendly AI in a way that benefits humanity as a whole, rather than serving the financial interest of its owners.

- OpenAI GP LLC: This is the general partner entity, which likely manages the OpenAI Limited Partnership (LP). The GP is responsible for the day-to-day operations and investment decisions of the LP.

- Holding Company for OpenAI Nonprofit + employees + investors: This is a holding company, meaning it holds the assets (ownership stakes) of other companies. It is owned by the OpenAI nonprofit, employees, and other investors. This kind of structure is often used to consolidate control over multiple entities and to align the interests of various stakeholders.

- OpenAI Global, LLC (capped profit company): This is the for-profit arm of OpenAI that operates with a “capped profit” structure, meaning there’s a limit to the financial profits investors can receive. Its aim is to fund the mission of the nonprofit through profitable ventures while limiting the influence of profit-maximizing motives.

- Microsoft: A major investor in, and partner of, OpenAI, providing financial support and potentially influencing its strategic direction.

- Employees & other investors: These are individuals who have invested in the holding company and may have equity stakes in the success of OpenAI. Their role is typically to contribute to the company’s operations, and they have a vested interest in its success.

Each of these entities may in turn have its own charter, bylaws and operating agreements, which define, at some level of abstraction, how it interacts with the other entities and with external parties.
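The “capped profit” idea behind OpenAI Global, LLC can be stated as simple arithmetic. The sketch below is a simplified, hypothetical model and does not reproduce OpenAI's actual agreements. An investor's share of proceeds is paid out only up to a fixed multiple of the original investment, and anything above that cap flows to the nonprofit. OpenAI's 2019 announcement described a 100x cap for first-round investors. The investment and proceeds figures are invented for illustration, and the function name is hypothetical.

```python
from typing import Tuple


def split_capped_returns(investment: float, cap_multiple: float,
                         attributable_proceeds: float) -> Tuple[float, float]:
    """Split one investor's attributable proceeds into (to_investor, to_nonprofit).

    Simplified, hypothetical model: the investor receives proceeds up to
    cap_multiple * investment, and everything beyond that cap goes to the nonprofit.
    """
    cap = investment * cap_multiple
    to_investor = min(attributable_proceeds, cap)
    return to_investor, attributable_proceeds - to_investor


# Hypothetical example: $10M invested under a 100x cap.
for proceeds in (500e6, 2e9):
    investor, nonprofit = split_capped_returns(10e6, 100, proceeds)
    print(f"proceeds ${proceeds / 1e6:,.0f}M -> investor ${investor / 1e6:,.0f}M, "
          f"nonprofit ${nonprofit / 1e6:,.0f}M")
```

Below the cap, the structure behaves like an ordinary investment. Above it, every additional dollar serves the nonprofit's mission.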

Formal and Informal Systems

A formal system in the context of mathematics, logic, and computer science, is a set of rules and symbols used to construct a logical framework. The key characteristics of a formal system include:

- Alphabet: A finite set of symbols from which strings (sequences of symbols) can be formed.

- Axioms: A set of initial expressions or statements assumed to be true within the system.

- Rules of Inference: Precise, well-defined rules that specify how to derive new expressions or statements (theorems) from existing ones.

- Syntax: The rules that govern the formation of valid strings of symbols within the system.

- Semantics: The meaning or interpretation of the strings and symbols within the system.
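To make these components concrete, here is a minimal Python sketch of a toy formal system: the MIU system popularized by Douglas Hofstadter in Gödel, Escher, Bach. This is an illustrative choice, and nothing in the discussion depends on it. The system's alphabet is {M, I, U}, its only axiom is MI, and it has four rules of inference. The names `one_step` and `theorems` are hypothetical.

```python
from typing import Set

AXIOM = "MI"  # the single axiom; every theorem is a string over the alphabet {M, I, U}


def one_step(theorem: str) -> Set[str]:
    """Return every string derivable from `theorem` by one application of one rule."""
    derived: Set[str] = set()
    if theorem.endswith("I"):                 # Rule 1: xI -> xIU
        derived.add(theorem + "U")
    if theorem.startswith("M"):               # Rule 2: Mx -> Mxx
        derived.add(theorem + theorem[1:])
    for i in range(len(theorem) - 2):         # Rule 3: xIIIy -> xUy
        if theorem[i:i + 3] == "III":
            derived.add(theorem[:i] + "U" + theorem[i + 3:])
    for i in range(len(theorem) - 1):         # Rule 4: xUUy -> xy
        if theorem[i:i + 2] == "UU":
            derived.add(theorem[:i] + theorem[i + 2:])
    return derived


def theorems(max_steps: int) -> Set[str]:
    """All theorems reachable from the axiom by a derivation of at most `max_steps` steps."""
    known = {AXIOM}
    frontier = {AXIOM}
    for _ in range(max_steps):
        frontier = {t for s in frontier for t in one_step(s)} - known
        known |= frontier
    return known


print(sorted(theorems(2)))  # ['MI', 'MII', 'MIIII', 'MIIU', 'MIU', 'MIUIU']
```

Running the rules longer can never settle whether MU is a theorem. That question is answered by reasoning about the system from the outside. No rule can turn a count of I's that is not a multiple of 3 into one that is. The axiom has one I, and MU has zero, so MU is unreachable.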

In a formal system, every proof or derivation is a finite sequence of steps, each of which applies a rule of inference to axioms or previously derived theorems (or lemmas) to produce new theorems. These systems form the basis for mathematical logic and theoretical computer science, enabling rigorous and unambiguous reasoning about mathematical and logical propositions. The “axioms” lay the foundational rules and principles from which the entire structure logically follows. They are taken as undisputed truths within the system and guide the interpretation and application of its more detailed rules. Alongside the axioms are the rules of inference, which form the backbone of deductive reasoning by providing the means to infer new truths from established ones:

- Modus Ponens: If ‘P implies Q’ (P → Q) and ‘P’ is true, then ‘Q’ must be true.

- Modus Tollens: If ‘P implies Q’ (P → Q) and ‘Q’ is false, then ‘P’ must also be false.

- Chain Rule: If ‘P implies Q’ (P → Q) and ‘Q implies R’ (Q → R), then ‘P implies R’ (P → R).

- Universal Generalization: If a statement P(c) has been proven for an arbitrary element c, about which nothing special was assumed, then it holds for all elements: ∀x P(x). Merely observing many true instances is not enough.

- Existential Instantiation: If it is known that ‘there exists an x such that P(x) is true’, then we can introduce a new name ‘a’ for such an element and infer ‘P(a)’, provided ‘a’ is not used elsewhere in the proof.

- and a few others
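Modus ponens and modus tollens are mechanical enough to run. The propositional sketch below uses the hypothetical atoms `rain`, `wet_ground` and `slippery`. It applies each rule repeatedly until nothing new can be derived. The quantifier rules are not modeled. The chain rule comes for free: `slippery` is derived from `rain` even though no single rule links them directly.

```python
from typing import Set, Tuple

# An implication "P -> Q" is represented as the pair (P, Q); atoms are plain strings.
Implication = Tuple[str, str]


def forward_chain(true_facts: Set[str], rules: Set[Implication]) -> Set[str]:
    """Apply modus ponens (from P and P -> Q, infer Q) until nothing new follows."""
    known = set(true_facts)
    changed = True
    while changed:
        changed = False
        for p, q in rules:
            if p in known and q not in known:
                known.add(q)
                changed = True
    return known


def modus_tollens_closure(false_facts: Set[str], rules: Set[Implication]) -> Set[str]:
    """Apply modus tollens (from not-Q and P -> Q, infer not-P) until nothing new follows."""
    known_false = set(false_facts)
    changed = True
    while changed:
        changed = False
        for p, q in rules:
            if q in known_false and p not in known_false:
                known_false.add(p)
                changed = True
    return known_false


# Hypothetical rules: rain -> wet_ground, wet_ground -> slippery.
RULES = {("rain", "wet_ground"), ("wet_ground", "slippery")}

print(sorted(forward_chain({"rain"}, RULES)))              # ['rain', 'slippery', 'wet_ground']
print(sorted(modus_tollens_closure({"slippery"}, RULES)))  # ['rain', 'slippery', 'wet_ground']
```

The first call lists the atoms known to be true. The second lists the atoms known to be false.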

While the legal entities are not formal systems in a theoretical sense, they do operate within a framework of laws and regulations that define their creation, governance, operation, and dissolution. In the case of an entity structure like the one used by OpenAI, the “axioms” would be the fundamental agreements and legal structures that define the organization:

- Corporate Charter: The formal document that establishes a corporation’s existence.

- Bylaws: The rules governing the operation of the corporation.

- Operating Agreements: For LLCs, this document outlines the financial and functional decisions of the business, including rules, regulations, and provisions.

- Partnership Agreements: Documents that set forth the terms of the partnership, such as profit sharing, decision-making processes, and dissolution terms.

- Investment Agreements: These dictate the terms under which investors contribute to the company and what they receive in return, such as equity shares.

- Mission Statement: While not a legal document, this is a declaration of the organization’s core purpose and focus, which influences all other agreements and actions.

In both cases, however, just as Gödel exposed the inherent limits of mathematical systems, one could, in theory, scrutinize these legal and organizational axioms for logical consistency and for coherence with their applied outcomes.

Gödel’s Work

Gödel’s work showed that there are intrinsic limitations to what can be known or proven within mathematical systems, highlighting the inherent complexities and mysteries in the foundations of mathematics. His two incompleteness theorems state:

- First Incompleteness Theorem: In any consistent, effectively axiomatized formal system (one whose axioms can be listed by an algorithm) that is strong enough to express basic arithmetic, there exist statements that can be neither proven nor disproven within the system. In other words, such systems always contain undecidable statements or propositions.

- Second Incompleteness Theorem: No consistent, effectively axiomatized formal system strong enough to express basic arithmetic can prove its own consistency. This implies that if such a system is consistent, it cannot be used to prove that it is free from contradictions or inconsistencies.

The story of Gödel’s Citizenship

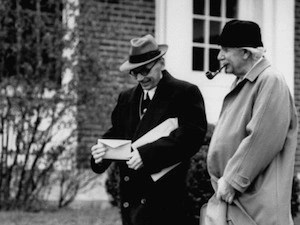

Kurt Gödel purportedly discovered an inconsistency in the U.S. Constitution while preparing for his U.S. citizenship exam. Gödel, an Austrian-born logician and mathematician who had fled Europe during World War II, had settled in Princeton, New Jersey, and was applying for naturalization. His profound understanding of logic and systems naturally led him to scrutinize the principles of the U.S. Constitution while studying for his citizenship test.

According to accounts from his colleagues, notably the economist Oskar Morgenstern, who accompanied Gödel to his citizenship hearing, Gödel told Morgenstern that while studying the Constitution he had found a logical contradiction that could allow a dictatorship to emerge within the Constitution’s own legal framework.

On the day of the examination, Morgenstern and Albert Einstein, who was also present, feared Gödel might actually bring up his discovery during the citizenship exam. The story goes that during the hearing, the judge asked Gödel if he thought a dictatorship like the one in Germany could happen in the U.S., and Gödel started to explain his discovery of a loophole that could allow for such an event. However, the judge, noticing where the conversation was heading and not wanting to delve into theoretical discussions, deftly interrupted Gödel and moved the conversation along, averting any detailed exposition on the matter.

In the context of Gödel’s work and his views on the U.S. Constitution, “axioms” refer to:

- The Preamble: This sets the stage for the Constitution’s purpose and introduces the guiding principles of justice, peace, defense, welfare, liberty, and prosperity.

- The Articles: These establish the structure of the federal government, its powers, and the relationship between the states and the federal entity.

- The Amendments: Including the Bill of Rights and subsequent amendments, these are the changes and clarifications to the Constitution that have been ratified over time.

- Separation of Powers: The division of power among the executive, legislative, and judicial branches, known as the system of checks and balances.

- Rule of Law: The principle that the law applies to everyone, including those who govern.

- Popular Sovereignty: The idea that the authority of the government is created and sustained by the consent of its people.

In the context of a legal framework like the U.S. Constitution, the “rules of inference” are not as formally defined as in mathematical logic, but the reasoning process is somewhat analogous. Legal rules of inference include principles of statutory interpretation, precedent (stare decisis), and the application of legal doctrines that guide how the Constitution and laws are to be understood and applied. This involves:

- Literal Rule: Taking the plain, ordinary meaning of the text as the basis for interpretation.

- Golden Rule: Modifying the literal meaning to avoid an absurd result.

- Mischief Rule: Considering what “mischief” the statute was intended to prevent.

- Precedent and Stare Decisis: Following previous court decisions to ensure consistency.

- Proportionality: Ensuring that legal consequences are proportionate to the relevant actions or issues.

In conclusion, while entities like OpenAI and constitutions like that of the United States are not formal systems in the mathematical sense, the principles of axiomatic thinking are still deeply relevant to them. Gödel’s Incompleteness Theorems, though primarily concerned with mathematical logic, offer a potent analogy for understanding these structures. They suggest that any system, no matter how robustly designed, might harbor inherent limitations or unexpected pathways. Gödel’s analysis of the U.S. Constitution, where he speculated about a loophole that could theoretically lead to a dictatorship, is a case in point. It illustrates how even the most meticulously crafted systems can contain unforeseen consequences or vulnerabilities.

In the context of OpenAI, particularly with the advent of advanced AI models like GPT-4 and the anticipated GPT-5, this analogy takes on added significance. These AI systems, embedded within complex organizational and legal structures, have far-reaching impacts on humanity. Their governance and decision-making processes, though not strictly axiomatic systems, are subject to similar principles of foundational assumptions, rule-based inferences, and the potential for unexpected outcomes.

Therefore, it is necessary to engage in careful deliberation and debate regarding the decentralized (or less centralized) governance and decision-making surrounding entities like OpenAI. This discussion is not just a matter of legal and organizational necessity but also a fundamental requirement to anticipate, understand, and mitigate the profound implications these powerful AI systems have on society. As we embark on this era of unprecedented technological advancement, we must apply the lessons from axiomatic systems and Gödel’s insights to ensure that these AI entities operate in a manner that is ethical, transparent, and ultimately beneficial to all of humanity.

The Power of AI through the lens of Gödel’s work

Kurt Gödel, born on April 28, 1906, was an Austrian mathematician, logician, and philosopher. He made significant contributions to the fields of logic, mathematics, and computer science, leaving a lasting impact on our understanding of the foundations of mathematics and the nature of computation.

Gödel’s most significant achievements are his incompleteness theorems, which revolutionized the field of logic and mathematics.

- First Incompleteness Theorem: In any consistent, effectively axiomatized formal system (one whose axioms can be listed by an algorithm) that is strong enough to express basic arithmetic, there exist statements that can be neither proven nor disproven within the system. In other words, such systems always contain undecidable statements or propositions.

- Second Incompleteness Theorem: No consistent, effectively axiomatized formal system strong enough to express basic arithmetic can prove its own consistency. This implies that if such a system is consistent, it cannot be used to prove that it is free from contradictions or inconsistencies.

These theorems shook the foundations of mathematics by revealing the inherent limitations of formal systems: any consistent axiomatic system strong enough to express arithmetic will contain undecidable statements. This was a death knell to David Hilbert’s formalist program, which aimed to provide a complete, consistent, and decidable foundation for all of mathematics.

The Church-Turing thesis posits that any function that can be computed by an algorithm can be computed by a Turing machine, effectively defining the boundaries of computability. It provides a framework for understanding the limitations of algorithmic computation, just as Gödel’s incompleteness theorems did for formal axiomatic systems (like arithmetic). Turing developed the Universal Turing Machine, a theoretical device capable of simulating any other Turing machine. This gave him a way to demonstrate the undecidability of the halting problem: no algorithm can determine, for every program and input, whether that program will halt or run indefinitely. This result mirrors the inherent limitations revealed by Gödel’s incompleteness theorems, as both highlight the existence of uncomputable and undecidable problems within their respective domains.
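The diagonal argument behind the halting problem can be acted out in a few lines of Python. This is an illustration under simplifying assumptions, not a proof. Programs are modeled as generator functions in which each `yield` counts as one step, and a "decider" is any function that predicts whether a program halts. Whatever the decider predicts about the contrarian program D built from it, D does the opposite. The names are hypothetical.

```python
from typing import Callable, Iterator

# Assumption for this sketch: a "program" is a zero-argument generator function.
# Each `yield` is one computation step; returning means the program has halted.
Program = Callable[[], Iterator[None]]
# A candidate halting decider predicts True ("halts") or False ("runs forever").
Decider = Callable[[Program], bool]


def make_contrarian(halts: Decider) -> Program:
    """Turing's diagonal program D: ask the decider about D itself, then do the opposite."""
    def contrarian() -> Iterator[None]:
        if halts(contrarian):
            while True:  # decider said "halts", so run forever
                yield
        # decider said "runs forever", so halt immediately
    return contrarian


def halted_within(program: Program, max_steps: int) -> bool:
    """Run `program` for at most `max_steps` steps; True if it halted within that budget."""
    for step, _ in enumerate(program(), start=1):
        if step >= max_steps:
            return False
    return True


# Two (necessarily flawed) candidate deciders.
candidates = {
    "always predicts 'halts'": lambda program: True,
    "always predicts 'runs forever'": lambda program: False,
}

for name, decider in candidates.items():
    d = make_contrarian(decider)
    print(f"{name}: predicted halts={decider(d)}, halted within budget={halted_within(d, 10_000)}")
```

The step budget in `halted_within` can only show that D has not halted *yet*. By construction, D never halts in the first case. No finite budget can settle that question in general, and that gap is exactly what the halting problem formalizes.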

As we embarked on the exploration of computability, completeness, and consistency within a structured framework, we discovered that the prevailing themes emerging from this inquiry are “uncomputable,” “undecidable,” and “incomplete.” In recent times, a new phenomenon has come into existence, Artificial Intelligence (AI), which appears to be taking over the world. AI, often asked audacious questions and seemingly possessing infinite knowledge, gives the impression of knowing everything and wielding immeasurable power. This remarkable technology has transformed various aspects of our lives, from automating mundane tasks to making complex decisions, but it is not a panacea for all human challenges. It is crucial to recognize that, just like the systems studied in mathematical logic, AI is also bound by certain inherent limitations. The very principles of Gödel’s incompleteness theorems, which unveiled the boundaries of formal axiomatic systems, serve as a reminder that AI, despite its impressive capabilities, is not all-powerful.

This raises the question: what does “power” mean?

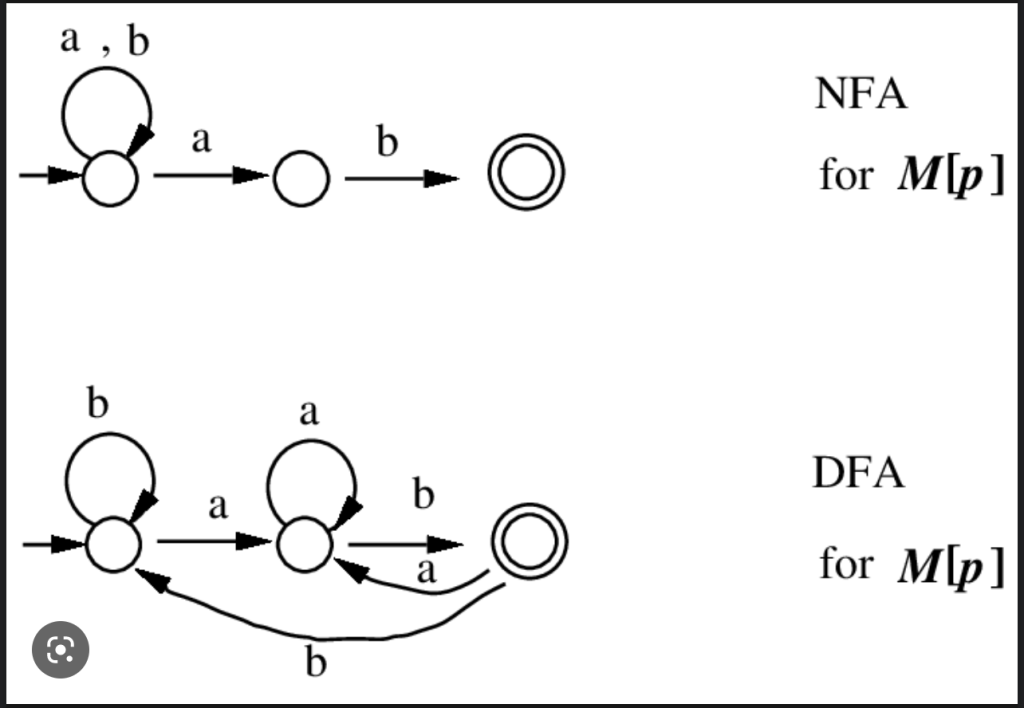

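To illustrate this, recall that DFAs and NFAs are of equal power: both recognize exactly the regular languages. More broadly, there are deep correspondences between formal logic (propositional, first-order, second-order, etc.), languages/grammars (regular, CFG, CSG, etc.), and the theory of automata/machines (DFA/NFA, PDA, Turing machine, etc.). For example, regular grammars correspond to finite automata, context-free grammars to pushdown automata, context-sensitive grammars to linear bounded automata, and unrestricted grammars to Turing machines. These correspondences mean that many statements made within one domain can be translated into another while retaining their original meaning and impact.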

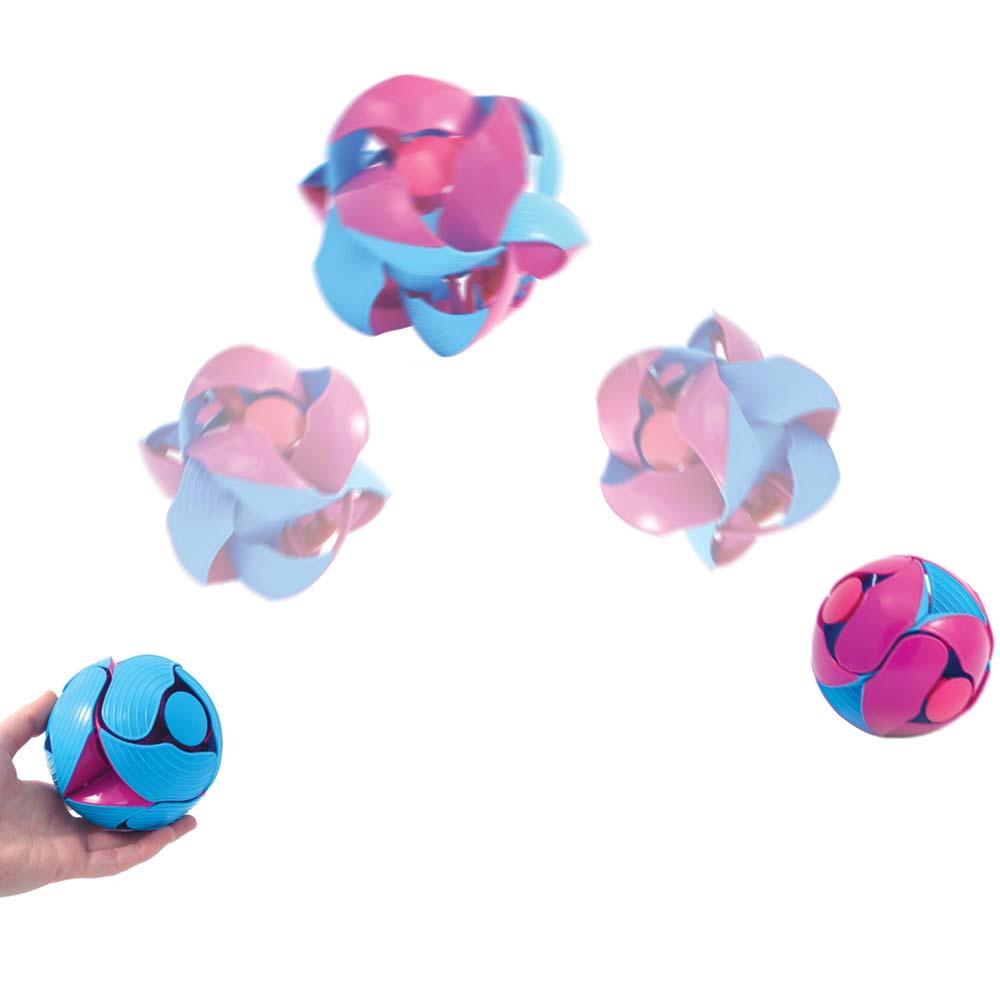

While NFAs allow multiple transitions for each input symbol and can be in multiple states simultaneously, DFAs have a single, uniquely determined transition for each input symbol. Despite their increased flexibility, NFAs possess the same computational power as DFAs. Having more options (or doing more in one time unit) does not always result in greater capabilities. The situation resembles the advantage that a lever or a pulley offers in mechanics. These simple machines provide mechanical advantage: they let a person do the same amount of work with less force, not do more work.
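The equivalence is constructive, and the standard proof is the subset construction. Here is a minimal Python sketch of it, assuming an NFA without ε-transitions. The example NFA, which accepts strings over {a, b} ending in "ab", and all names are illustrative. Each DFA state is a set of NFA states, so the NFA's "being in several states at once" becomes a single, deterministic state.

```python
from itertools import product
from typing import Dict, FrozenSet, Set, Tuple

State = int
NFADelta = Dict[Tuple[State, str], Set[State]]
DFAState = FrozenSet[State]
DFADelta = Dict[Tuple[DFAState, str], DFAState]


def nfa_accepts(delta: NFADelta, start: State, accepting: Set[State], word: str) -> bool:
    """Simulate the NFA by tracking every state it could be in at once."""
    current = {start}
    for ch in word:
        current = {t for s in current for t in delta.get((s, ch), set())}
    return bool(current & accepting)


def subset_construction(delta: NFADelta, start: State, accepting: Set[State], alphabet: str):
    """Build an equivalent DFA whose states are (frozen) sets of NFA states."""
    dfa_start: DFAState = frozenset({start})
    dfa_delta: DFADelta = {}
    seen = {dfa_start}
    todo = [dfa_start]
    while todo:
        current = todo.pop()
        for ch in alphabet:
            nxt = frozenset(t for s in current for t in delta.get((s, ch), set()))
            dfa_delta[(current, ch)] = nxt
            if nxt not in seen:
                seen.add(nxt)
                todo.append(nxt)
    dfa_accepting = {s for s in seen if s & accepting}
    return dfa_delta, dfa_start, dfa_accepting, seen


def dfa_accepts(dfa_delta: DFADelta, start: DFAState, accepting: Set[DFAState], word: str) -> bool:
    """Exactly one transition per symbol: the DFA is always in a single state."""
    state = start
    for ch in word:
        state = dfa_delta[(state, ch)]
    return state in accepting


# Example NFA (an assumption for illustration): strings over {a, b} ending in "ab".
NFA = {(0, "a"): {0, 1}, (0, "b"): {0}, (1, "b"): {2}}
DFA_DELTA, DFA_START, DFA_ACCEPT, DFA_STATES = subset_construction(NFA, 0, {2}, "ab")

# Both machines agree on every word of length 0 to 8.
for n in range(9):
    for letters in product("ab", repeat=n):
        w = "".join(letters)
        assert nfa_accepts(NFA, 0, {2}, w) == dfa_accepts(DFA_DELTA, DFA_START, DFA_ACCEPT, w)

print(len(DFA_STATES))  # 3
```

The lever analogy shows up in the worst case. An n-state NFA can require up to 2^n DFA states, so the NFA is a more convenient description, not a more powerful machine.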

This observation aligns with Gödel’s incompleteness theorems, which revealed the inherent limitations of formal axiomatic systems. It suggests that no matter how advanced AI becomes, it will always be subject to constraints arising from the fundamental nature of mathematics and logic (because those are the fundamental building blocks on which the AI is built). Just as the computational power of NFAs and DFAs is equivalent despite their differences, AI’s vast access to information and computational prowess does not guarantee its ability to conquer every problem or answer every question. Instead, AI remains subject to the same limitations as any formal system, reminding us that even the most powerful tools have boundaries and that human ingenuity and agency must continue to play a critical role.

To elucidate the concept further, “power” in the context of formal systems pertains to “expressiveness.”

- The more expressive a class of grammars is, the larger the family of languages it can generate. For instance, the palindromes over {a, b} can be generated by a context-free grammar, whereas {a^n b^n c^n | n ≥ 1} cannot be generated by any context-free grammar and requires a context-sensitive one. Context-sensitive grammars are therefore more powerful: they can generate every context-free language, including the palindromes, as well as languages such as {a^n b^n c^n | n ≥ 1} that lie beyond the context-free class.
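The contrast can be sketched in Python. The palindromes are produced purely by rewriting with the context-free grammar S → aSa | bSb | a | b | ε. No context-free grammar exists for {a^n b^n c^n | n ≥ 1}, so the recognizer below is an ordinary program that compares three counts at once. Intuitively, a pushdown automaton's single stack can match two blocks, as in a^n b^n, but not three. The function names are illustrative.

```python
import re
from typing import Set


def derive_palindromes(max_len: int) -> Set[str]:
    """All strings of length <= max_len generated by the context-free grammar
    S -> aSa | bSb | a | b | ε   (the palindromes over {a, b})."""
    results = {w for w in ("", "a", "b") if len(w) <= max_len}  # S -> ε | a | b
    frontier = set(results)
    while frontier:
        # S -> aSa | bSb: wrap each newly derived middle in a matching pair of letters.
        frontier = {c + w + c for w in frontier for c in "ab" if len(w) + 2 <= max_len}
        results |= frontier
    return results


def in_anbncn(word: str) -> bool:
    """Membership in {a^n b^n c^n | n >= 1}: three blocks whose lengths must all agree."""
    match = re.fullmatch(r"(a+)(b+)(c+)", word)
    return bool(match) and len(match.group(1)) == len(match.group(2)) == len(match.group(3))


print(len(derive_palindromes(4)))               # 13
print(in_anbncn("aabbcc"), in_anbncn("aabbc"))  # True False
```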

- The more powerful a logical system is, the more it can express and reason about. Take first-order and second-order logic: both use predicate symbols and quantifiers, but they differ in expressiveness and in the types of objects they can quantify over. First-order logic quantifies only over individual objects, while expressing their properties and relations. Second-order logic can also quantify over relations and functions themselves, which are higher-level objects.

In the realm of Artificial Intelligence, “power” may be interpreted as “Inclusiveness.” A highly powerful AI system is characterized by its ability to embrace, consider, and act in a manner that fosters the well-being of all individuals, surpassing the limitations of conventional computational tools and models. As each person is on their own unique journey, AI aids by addressing survival tasks and empowering them to delve into introspective questions such as “What constitutes my well-being?”, “Where am I headed?”, and “Who am I?” By fostering this level of introspection and self-awareness, AI can truly claim to be more powerful!

As we celebrate Gödel’s birthday, we honor his enduring legacy, which continues to inspire researchers and thinkers across disciplines. His work remains a testament to the beauty and complexity of mathematical inquiry, challenging us to grapple with the limits of human understanding and to push the envelope in our quest for knowledge.

Entanglement, Double Slit, Observer, Mind, Freewill

I have been meaning to connect these dots for a while, and this weekend I took up the task of writing the first draft.

Nobel Prize in Physics 2022 on Entanglement

The Nobel Prize in Physics 2022 was awarded jointly to Alain Aspect, John F. Clauser and Anton Zeilinger “for experiments with entangled photons, establishing the violation of Bell inequalities and pioneering quantum information science.” Quantum entanglement is a phenomenon in which pairs or groups of particles are generated or interact in ways such that the quantum state of each particle of the pair or group cannot be described independently – instead, a quantum state may be given for the system as a whole.

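Bell inequalities are constraints that any theory based on local realism must satisfy. Local realism combines two assumptions: that objects have definite properties even when they are not being observed (realism), and that no influence can travel faster than light (locality). The experiments of Aspect, Clauser and Zeilinger showed that entangled particles violate Bell inequalities, so at least one of these assumptions must fail. In the standard quantum reading, the properties of entangled particles are not determined until they are observed.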
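The best-known Bell inequality, the CHSH inequality, reduces to a few lines of arithmetic. The sketch below makes two stated assumptions. First, measurement outcomes are ±1. Second, the entangled pair is a spin singlet, for which quantum mechanics predicts the correlation E(a, b) = -cos(a - b) between measurement settings a and b. Photon-polarization experiments use an analogous formula with 2(a - b). Any local realistic model is a mixture of deterministic ±1 strategies and therefore satisfies |S| ≤ 2. The quantum prediction at the angles chosen below reaches 2√2. The function names are illustrative.

```python
import math
from itertools import product


def local_deterministic_max() -> int:
    """Largest |S| over all local deterministic strategies: each side fixes a ±1
    answer for each of its two settings in advance (a local realistic model)."""
    best = 0
    for a0, a1, b0, b1 in product((-1, 1), repeat=4):
        s = a0 * b0 - a0 * b1 + a1 * b0 + a1 * b1
        best = max(best, abs(s))
    return best


def singlet_correlation(a: float, b: float) -> float:
    """Quantum prediction E(a, b) = -cos(a - b) for spin measurements on a singlet pair."""
    return -math.cos(a - b)


def chsh(a: float, a2: float, b: float, b2: float) -> float:
    """CHSH combination S = E(a, b) - E(a, b') + E(a', b) + E(a', b')."""
    E = singlet_correlation
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


s_quantum = chsh(0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print(local_deterministic_max())  # 2
print(round(abs(s_quantum), 4))   # 2.8284  (= 2 * sqrt(2) > 2)
```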

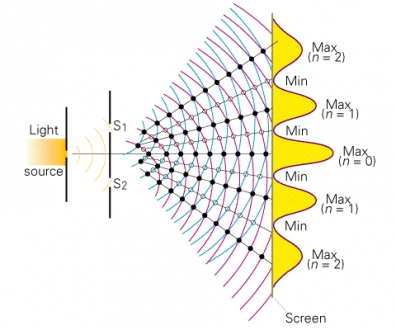

Double Slit Experiment

The double-slit experiment is a fundamental experiment in quantum mechanics that demonstrates the wave-particle duality of matter and the probabilistic nature of quantum measurements. The experiment involves shining a beam of particles, such as electrons or photons, through two parallel slits onto a screen. The resulting pattern on the screen is an interference pattern, indicating that the particles behave as waves. The pattern builds up even when particles are sent one at a time, so each particle in effect interferes with itself. However, when a measurement determines which slit each particle passes through, the interference pattern disappears. The “Copenhagen” interpretation holds that the act of measurement collapses the wave function and forces the particles to behave like particles instead of waves. The “Many Worlds” interpretation instead suggests that observation causes the universe to branch into multiple parallel worlds, each corresponding to a different possible outcome of the experiment. In this view, the wave-like behavior of the particles is not lost when they are observed, but rather continues in the other parallel worlds.
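A minimal numerical sketch shows the difference between the two cases. It assumes idealized conditions: two very narrow slits, a distant screen, equal amplitudes through each slit, and no single-slit envelope. Without which-path information, the complex amplitudes for the two paths add before squaring, which produces fringes. With which-path information, the probabilities add instead, and the fringes vanish. The wavelength and slit spacing are hypothetical example values.

```python
import cmath
import math


def two_slit_intensity(theta: float, slit_gap: float, wavelength: float,
                       which_path_known: bool) -> float:
    """Relative intensity at angle theta on a distant screen (ideal narrow slits).

    Unobserved: amplitudes add, then square (interference).
    Which-path known: squared amplitudes (probabilities) add (no interference).
    """
    phase_difference = 2 * math.pi * slit_gap * math.sin(theta) / wavelength
    amp_slit_1 = 1 / math.sqrt(2)
    amp_slit_2 = cmath.exp(1j * phase_difference) / math.sqrt(2)
    if which_path_known:
        return abs(amp_slit_1) ** 2 + abs(amp_slit_2) ** 2
    return abs(amp_slit_1 + amp_slit_2) ** 2


# Hypothetical example: 500 nm light, slits 1 micrometre apart.
gap, lam = 1e-6, 500e-9
dark_fringe = math.asin(lam / (2 * gap))  # path difference of half a wavelength
for theta in (0.0, dark_fringe):
    print(round(two_slit_intensity(theta, gap, lam, False), 6),
          round(two_slit_intensity(theta, gap, lam, True), 6))
```

At the centre (θ = 0), the coherent intensity is 2, and at the first dark fringe it is essentially 0. The which-path intensity is 1 at both angles.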

The Tenth Man story

The story goes like this: ten boys were studying with a guru and went on a journey together. Along the way, they came to believe that they were one man short. Each boy counted the others and found only nine. They searched high and low but could not find the tenth man. They were all very sad and returned to the guru to report that one of them was missing. The guru asked them to count again, but this time he told them to count themselves as well. When they did, each boy found the missing tenth man: himself. The guru explained that the tenth man had never really been missing. He was always there, and it was just a matter of recognizing one’s own self.

Mind

There exists a philosophy (yoga) that defines the architecture of mind as manas, chitta, buddhi, and ahamkara.

- Manas is the sensory or perceptual aspect of the mind. It is responsible for receiving sensory input from the environment and processing it into recognizable forms. Manas is often compared to a camera or a mirror that reflects the external world.

- Chitta is the aspect of the mind that stores impressions or memories. It is responsible for retaining past experiences and creating a sense of continuity and identity over time. Chitta is often compared to a library or a computer hard drive that stores information.

- Buddhi is the aspect of the mind that represents the higher intellect or discernment. It is responsible for making decisions based on logic, reason, and intuition. Buddhi is often compared to a judge or a guide that helps us navigate the world and make wise choices.

- Ahamkara is the aspect of the mind that creates a sense of individuality or ego. It is responsible for creating a sense of “I” or “me” that separates us from others and the rest of the world. Ahamkara is often compared to a mask or a cloak that hides our true nature. It is the trickiest of the components: it acts as a veil over one’s true nature, while at the same time the “I” also induces a sense of doership.

The study of quantum mechanics has led us to question our classical ideas of reality and has introduced concepts such as entanglement and quantum non-locality. The double-slit experiment shows that the act of measurement itself affects the outcome and, in the standard interpretation, that properties are not definite until they are measured. The Tenth Man story reminds us that it is not just what we measure or observe, but also how we interpret it, that matters. Ultimately, this interpreter has a mind, the one that induces doership and gives the illusion of choice and free will.

Introspect the interpreter/observer to unlock the secrets and understand its nature. Based on the experimental data above, it appears that everything is connected to everything, and that it is only the interpreter/observer who somehow comes into existence and makes it local and limited. But this is for each one of us to realize for ourselves.

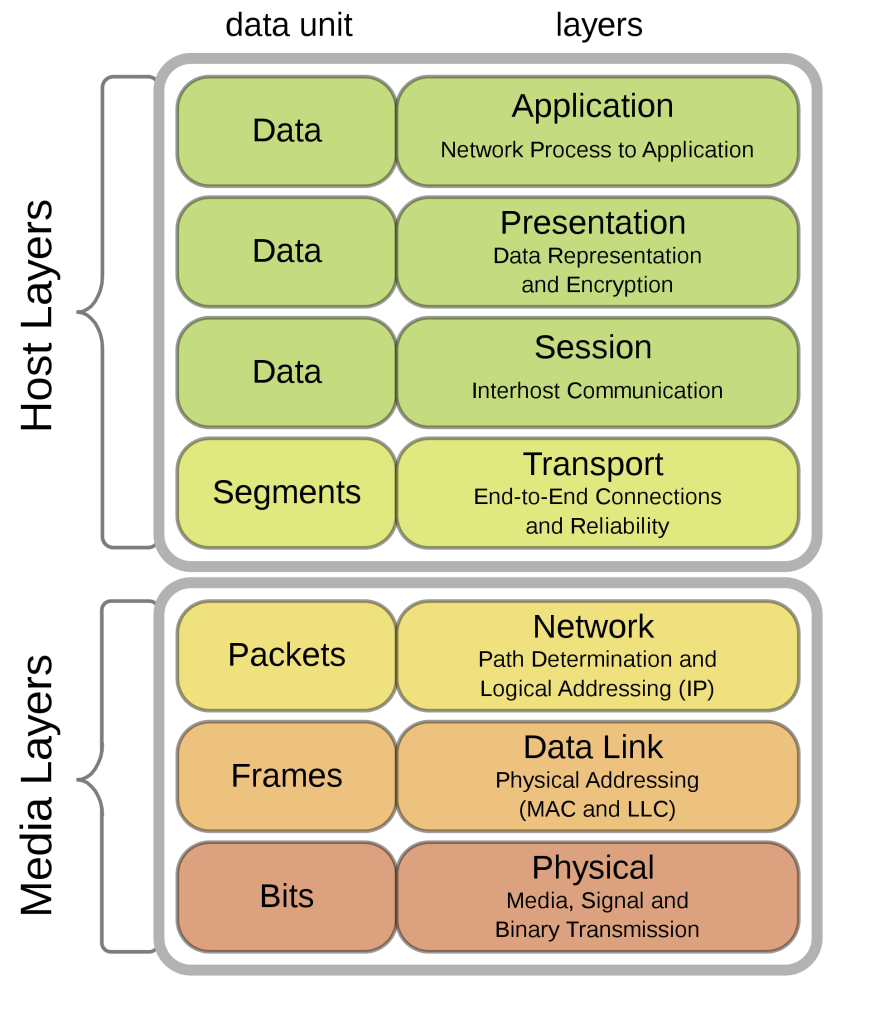

Telecommunications, 5G and Shannon Limit

Telecommunication – the transmission of signals over a distance for the purpose of communication. The engineering aspect of telecommunications focuses on the transmission of signals or messages between a sender and a receiver, irrespective of the semantic meaning of the message. The Open Systems Interconnection model (OSI model) is a conceptual model that characterises and standardises the communication functions of a telecommunication or computing system without regard to its underlying internal structure and technology.

Such an abstraction allows the functionality provided by layer N to be defined in terms of the services offered by layer N-1. Communication protocols enable an entity in one host to interact with a corresponding entity at the same layer in another host. A communication protocol is a system of rules that allows two or more entities of a communications system to transmit information.
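Layering can be sketched as encapsulation. On the way down, each layer wraps the unit it receives from the layer above with its own header (and, in this sketch, a trailer). On the way up, each layer strips only what its peer layer added. The tag-style headers below are hypothetical placeholders, not real protocol formats. The physical layer is assumed simply to carry the resulting bits.

```python
from typing import List

# Layers 7 down to 2. The physical layer (1) is assumed simply to carry the final bits.
LAYERS: List[str] = ["application", "presentation", "session", "transport", "network", "data-link"]


def encapsulate(payload: str) -> str:
    """Sender side: each layer, top to bottom, wraps the unit handed down by the layer above."""
    unit = payload
    for layer in LAYERS:
        unit = f"<{layer}>{unit}</{layer}>"  # hypothetical header/trailer for this layer
    return unit


def decapsulate(unit: str) -> str:
    """Receiver side: each layer, bottom to top, strips only what its peer layer added."""
    for layer in reversed(LAYERS):
        header, trailer = f"<{layer}>", f"</{layer}>"
        if not (unit.startswith(header) and unit.endswith(trailer)):
            raise ValueError(f"{layer} layer received a malformed unit")
        unit = unit[len(header):-len(trailer)]
    return unit


frame = encapsulate("hello")
print(frame)
print(decapsulate(frame))  # hello
```

At layer N, `decapsulate` inspects only what layer N's peer added. Each layer can therefore change independently, as long as the service it offers to the layer above stays the same.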

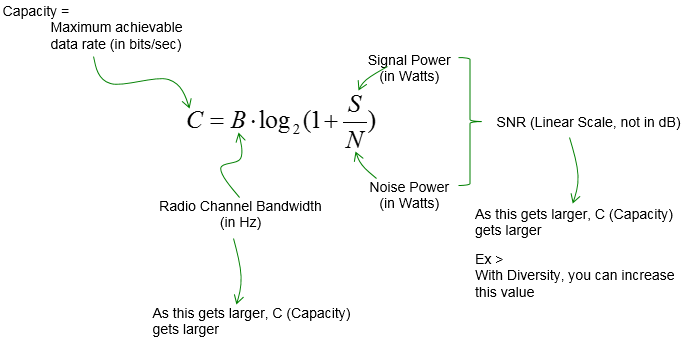

Information theory and Fundamental Limits: A revolution in wireless communication began in the first decade of the 20th century with Guglielmo Marconi’s pioneering developments in radio communications. It built on the work of earlier inventors such as Charles Wheatstone and Samuel Morse (the telegraph) and Alexander Graham Bell (the telephone), and was carried forward by radio pioneers such as Edwin Armstrong and Lee de Forest, among many others. Among those who shaped the field, Claude Shannon stands out. He defined “information” and introduced a simple abstraction of human communication (the channel). He also showed that data can be transmitted reliably only up to a maximum rate, the channel capacity. That capacity depends on the channel’s bandwidth and noise, and hence on the medium (copper wire, optical fibre, radio, etc.). He laid a mathematical basis for communication that gave the first systematic framework in which to optimally design telephone systems. The main questions motivating this work were how to design telephone systems to carry the maximum amount of information and how to correct for distortions on the lines.
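Shannon’s measure of information can be computed in a few lines. This sketch makes a simplifying assumption: each character of a message is treated as an independent symbol occurring with its observed frequency. It computes the entropy H = Σ p·log2(1/p), the average number of bits per symbol that an ideal code would need. The function name is illustrative.

```python
import math
from collections import Counter


def entropy_bits_per_symbol(message: str) -> float:
    """Shannon entropy H = sum(p * log2(1/p)) over the message's empirical symbol frequencies."""
    if not message:
        return 0.0
    total = len(message)
    return sum((n / total) * math.log2(total / n) for n in Counter(message).values())


print(entropy_bits_per_symbol("aaaaaaaa"))  # 0.0  (fully predictable: no information)
print(entropy_bits_per_symbol("abababab"))  # 1.0
print(entropy_bits_per_symbol("abcdabcd"))  # 2.0
```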

He used Boolean algebra, in which problems are solved by manipulating two symbols, 1 and 0, to establish the theoretical underpinnings of digital circuits that evolved into modern switching theory. The bit, the unit at the heart of his theory, is now ubiquitous. Communication lines are measured in bits per second, and the storage needed for pictures, voice streams and other data is measured in bits and bytes. He came to be known as the “Father of Information Theory”.
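Shannon’s insight was that switching circuits obey Boolean algebra: switches wired in series behave like AND, and switches wired in parallel behave like OR. Below is a minimal Python sketch of that correspondence. The function names and the half-adder example are illustrative choices, and NOT is modeled as a normally-closed contact. The circuit adds two 1-bit numbers.

```python
from itertools import product
from typing import Tuple


def series(*switches: bool) -> bool:
    """Current flows through switches in series only if every one is closed (AND)."""
    return all(switches)


def parallel(*switches: bool) -> bool:
    """Current flows through switches in parallel if any one is closed (OR)."""
    return any(switches)


def half_adder(x: bool, y: bool) -> Tuple[int, int]:
    """Illustrative switching circuit returning (sum, carry) for two 1-bit inputs.

    sum   = (x AND NOT y) OR (NOT x AND y)
    carry = x AND y
    """
    total = parallel(series(x, not y), series(not x, y))
    carry = series(x, y)
    return int(total), int(carry)


for x, y in product((0, 1), repeat=2):
    print(x, "+", y, "-> (sum, carry) =", half_adder(bool(x), bool(y)))
```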

5G: The five generations of wireless technology are summarized below. 5G makes a big statement with peak theoretical data rates of 20 Gbps for downlink and 10 Gbps for uplink.

- 1G: Voice only, analog cellular phones. Max speed: 2.4 kbps

- 2G: Digital phone calls, text messaging and basic data services. Max speed: 1 Mbps

- 3G: Integrated voice, messaging and mobile internet; first broadband data for an improved internet experience and use of applications. Max speed: 2 Mbps

- 4G: Voice, messaging, high-speed internet and high-capacity mobile multimedia; faster mobile broadband. Max speed: 1 Gbps

- 5G: A revolution in user experience, speeds and technology, connecting trillions of devices in the IoT and supporting smart homes, smart buildings and smart cities. Max speed: 20 Gbps (downlink, peak)

However, these are the numbers defined in the specifications, and the speeds experienced in practice are lower. A more useful metric, defined by the International Telecommunication Union (ITU) for the IMT-2020 standard (essentially the 5G standard), is the user experienced data rate. This is the data rate that users get in at least 95% of the locations where the network is deployed, for at least 95% of the time.
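In practice, this “95% of the time, 95% of the places” idea is commonly computed as the 5% point (5th percentile) of the distribution of per-user throughput. Below is a minimal sketch using the nearest-rank percentile; the throughput samples are hypothetical, not real network data. It also shows how far below the peak the experienced rate can sit.

```python
def user_experienced_rate(throughputs_mbps: list[float]) -> float:
    """Nearest-rank 5th percentile of per-user throughput samples.

    At least 95% of the samples are greater than or equal to the returned value.
    """
    if not throughputs_mbps:
        raise ValueError("need at least one throughput sample")
    ordered = sorted(throughputs_mbps)
    # Integer ceiling of 0.05 * n, avoiding floating-point rounding surprises.
    rank = -(-5 * len(ordered) // 100)
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical drive-test measurements in Mbps (not real network data).
    samples = [850, 40, 620, 300, 95, 710, 520, 15, 480, 390]
    print("peak:", max(samples), "Mbps")
    print("user experienced:", user_experienced_rate(samples), "Mbps")
```

With only ten samples, the 5th percentile is simply the worst one (15 Mbps), even though the best sample reached 850 Mbps. Real evaluations use far more samples across locations and times.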

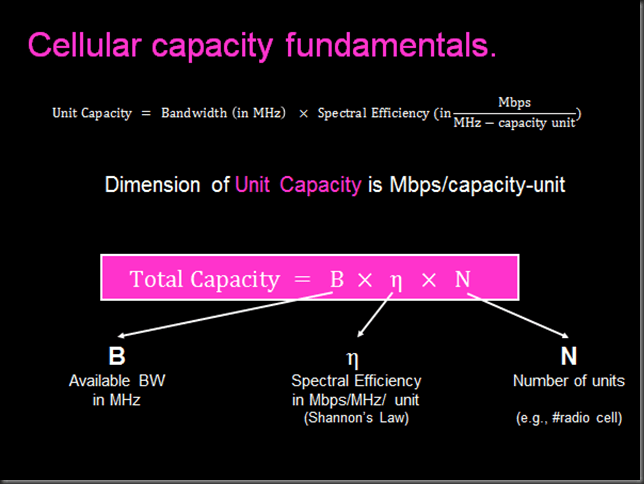

As cellular communication has progressed over the last two decades, we’ve rapidly approached the theoretical limits for wireless data transmission set by Shannon’s Law. In its multi-antenna form, the equation describes how the total data throughput capacity of a system depends on spectrum (radio frequencies), the number of antennas and the signal-to-noise ratio on the communication channel.
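The sketch below shows the three levers of spectrum, antennas and signal-to-noise ratio. It uses a deliberately idealized MIMO model: `min(n_tx, n_rx)` parallel spatial streams, each with the same SNR. Real channels fall short of this because of fading, correlation and power splitting. The 100 MHz bandwidth and 20 dB SNR are hypothetical inputs.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley capacity of a single channel: B * log2(1 + S/N)."""
    return bandwidth_hz * math.log2(1 + snr_linear)


def mimo_capacity_bps(bandwidth_hz: float, snr_db: float, n_tx: int, n_rx: int) -> float:
    """Idealized MIMO capacity: min(n_tx, n_rx) parallel streams at the same SNR.

    An upper-bound style simplification; it ignores fading, antenna
    correlation and the split of transmit power across streams.
    """
    snr_linear = 10 ** (snr_db / 10)  # convert dB to a linear power ratio
    streams = min(n_tx, n_rx)
    return streams * shannon_capacity_bps(bandwidth_hz, snr_linear)


if __name__ == "__main__":
    # Hypothetical 100 MHz channel at 20 dB SNR.
    base = mimo_capacity_bps(100e6, 20, 1, 1)
    print(f"1x1 baseline:  {base / 1e6:.0f} Mbps")
    print(f"4x4 MIMO:      {mimo_capacity_bps(100e6, 20, 4, 4) / 1e6:.0f} Mbps")
    print(f"2x bandwidth:  {mimo_capacity_bps(200e6, 20, 1, 1) / 1e6:.0f} Mbps")
    print(f"+10 dB SNR:    {mimo_capacity_bps(100e6, 30, 1, 1) / 1e6:.0f} Mbps")
```

The outputs show why the industry chases more spectrum and more antennas. Bandwidth and spatial streams scale capacity linearly, while a tenfold improvement in SNR adds only about 3.3 bits/s/Hz.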

I have described a few components of 5G in this article. At higher frequencies (millimeter waves: 30-300 GHz) much more spectrum is available, allowing more data to move across at any given instant. This opens up another decade or two of constantly pushing the limits of Shannon’s law and takes us into a new era of technology and experiences. I will try to explore this equation in the context of #5G in my subsequent posts.

5G shots

5G will be the most transformative tech of our lifetime. I will try to explain a few terms to demystify the landscape.

Millimeter Wave: An entirely new section of spectrum never before used for mobile services. Millimeter waves are broadcast at frequencies between 30 and 300 gigahertz, compared with the bands below 6 GHz used by earlier networks. They are called millimeter waves because their wavelengths range from 1 to 10 mm. By comparison, the radio waves that serve today’s smartphones measure up to tens of centimeters in length.
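The name follows directly from the relation wavelength = c / f. Here is a quick check of the numbers above; the sample frequencies are just illustrative points.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by definition of the metre


def wavelength_mm(frequency_hz: float) -> float:
    """Free-space wavelength (lambda = c / f), returned in millimetres."""
    return SPEED_OF_LIGHT_M_S / frequency_hz * 1000.0


if __name__ == "__main__":
    for label, freq in [("700 MHz", 700e6), ("6 GHz", 6e9), ("30 GHz", 30e9), ("300 GHz", 300e9)]:
        print(f"{label:>8}: {wavelength_mm(freq):8.2f} mm")
```

At 700 MHz the wavelength is roughly 43 cm and at 6 GHz about 5 cm. Across the millimeter wave band it shrinks from about 10 mm (30 GHz) to about 1 mm (300 GHz).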

Carrier Aggregation: Combines multiple frequency bands and uses them together. This means a user’s device can be connected simultaneously to both the 700 MHz and 1900 MHz portions of the spectrum, making better use of all the network resources. This increases data speeds (more data can be downloaded or uploaded at a given instant) because there is more room on the spectrum for traffic to move around.
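One detail is easy to miss: “700 MHz” and “1900 MHz” name where the carriers sit on the spectrum, but what adds up under carrier aggregation is each carrier’s bandwidth. The sketch below assumes hypothetical carrier widths of 10 and 20 MHz and a single spectral efficiency of 5 bit/s/Hz, purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class ComponentCarrier:
    band: str             # band label, e.g. "700 MHz" (where it sits on the spectrum)
    bandwidth_mhz: float  # how wide the carrier is; this is what aggregates


def aggregate_bandwidth_mhz(carriers: list[ComponentCarrier]) -> float:
    return sum(c.bandwidth_mhz for c in carriers)


def peak_throughput_mbps(carriers: list[ComponentCarrier], bits_per_hz: float) -> float:
    """MHz x (bit/s)/Hz = Mbit/s, assuming one spectral efficiency for every carrier."""
    return aggregate_bandwidth_mhz(carriers) * bits_per_hz


if __name__ == "__main__":
    # Hypothetical widths and spectral efficiency, for illustration only.
    carriers = [ComponentCarrier("700 MHz", 10.0), ComponentCarrier("1900 MHz", 20.0)]
    print(aggregate_bandwidth_mhz(carriers), "MHz")
    print(peak_throughput_mbps(carriers, bits_per_hz=5.0), "Mbps")
```

In reality each band has its own propagation and signal quality, so a fuller model would assign each carrier its own spectral efficiency.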

256 QAM and 4×4 MIMO: Quadrature amplitude modulation (QAM) is the name of a family of digital modulation methods, and a related family of analog modulation methods, widely used in modern telecommunications to transmit information. With a higher-order scheme like 256 QAM, the carrier can pack much more information into the same spectrum without losing quality, provided the signal is clean enough. This leads to higher speed and efficiency. Combine this with 4×4 MIMO (multiple input, multiple output), which layers the network by sending parallel data streams (stacking up in another dimension) and doubles the number of smartphone antennas compared with 2×2 MIMO. The amount of data that can be carried at any instant, and with it the speed, multiplies many fold.
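The gain can be counted directly: an M-QAM symbol carries log2(M) bits, and each MIMO layer carries its own stream of symbols. The comparison baseline below (64 QAM over 2×2 MIMO) is a hypothetical reference point. The count is of raw bits, before error-correction overhead.

```python
def bits_per_symbol(qam_order: int) -> int:
    """An M-QAM symbol carries log2(M) bits; M must be a power of two (4, 16, 64, 256...)."""
    if qam_order < 4 or qam_order & (qam_order - 1):
        raise ValueError("QAM order must be a power of two, at least 4")
    return qam_order.bit_length() - 1  # exact integer log2 for powers of two


def raw_bits_per_symbol_period(qam_order: int, mimo_layers: int) -> int:
    """Raw bits sent per symbol period across all spatial layers (ignores coding overhead)."""
    return bits_per_symbol(qam_order) * mimo_layers


if __name__ == "__main__":
    before = raw_bits_per_symbol_period(64, 2)  # hypothetical baseline: 64 QAM, 2x2 MIMO
    after = raw_bits_per_symbol_period(256, 4)  # 256 QAM over 4x4 MIMO
    print(before, after, round(after / before, 2))
```

Going from 12 to 32 raw bits per symbol period is roughly a 2.7× increase. Higher-order QAM only pays off when the SNR is high enough to tell the tightly packed constellation points apart.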

Full Duplex: With full duplex, a transceiver (within a cellphone) will be able to transmit and receive data at the same time on the same frequency, potentially doubling the capacity of wireless networks at their most fundamental physical layer.

Small Cells: Portable miniature base stations that use very little power to operate and can be placed every 250 meters or so throughout cities. To prevent signals from being dropped, carriers may blanket a city with thousands of small cell stations to form a dense network that acts like a relay team, handing off signals like a baton and routing data to users at any location.
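A back-of-the-envelope estimate shows why carriers talk about thousands of small cells. The sketch assumes one cell per 250 m × 250 m square and a hypothetical 100 km² city area. Real placement follows buildings, streets and traffic, and needs overlap for handoffs, so treat the result as an order of magnitude.

```python
import math


def small_cells_needed(area_km2: float, spacing_m: float = 250.0) -> int:
    """Rough count assuming one small cell per square of side `spacing_m`.

    A square grid is a simplification of real deployments.
    """
    cell_area_m2 = spacing_m ** 2
    return math.ceil(area_km2 * 1_000_000 / cell_area_m2)


if __name__ == "__main__":
    print(small_cells_needed(100))  # hypothetical 100 km^2 city
```

At 250 m spacing each cell covers 62,500 m², so 100 km² needs about 1,600 cells.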

The features and benefits of 5G will evolve over time, with transformative changes coming over the next several years as 3GPP develops the standards for each use case. Use cases for 5G fall into three broad categories: enhanced mobile broadband (eMBB), massive IoT, and critical IoT.

- Enhanced broadband will provide higher capacity and faster speeds for many of today’s common use cases. This includes fixed wireless access, video surveillance, enhanced experiences in brick-and-mortar retail locations, mobile phones and others.

- Massive IoT will support the scaling of machine-type communications. This solution will support health monitoring, wearable communications, fleet/asset management, inventory optimization, smart homes and more.

- Critical IoT will enable new use cases that require ultra-reliable, low-latency communications. It is a geographically-targeted solution for smart factories, smart grids, telemedicine, traffic management, remote and autonomous drones and robotics, mobile bio-connectivity, interconnected transport, autonomous vehicles and more.

Quest for best-in-class dev tools and platforms

A best-in-class digital workforce was one of our goals in 2019, and we made several strides in that direction. Developer productivity is one measure of this goal. In the spirit of empowering developers with best-in-class tools and productivity enhancers, I got a chance to evaluate Sourcegraph. In this article I want to share my evaluation journey and experience, primarily from a developer’s point of view.

Sourcegraph is a code search and web-based code intelligence tool for developers. It offers all of its features at scale, across a large “space”:

- Public service: [24 programming languages] x [all open source repos] x [all repo hosts: GitLab, GitHub, Bitbucket, AWS CodeCommit] x [all branches]

- Private service: [24 programming languages] x [all private repos on a self-hosted server] x [GitLab, GitHub, Bitbucket] x [all branches]

I approached the evaluation along the three pillars of Sourcegraph: Search, Review and Automation. Describing Sourcegraph’s rich feature set is an exercise in itself. Instead, I will try to make a case for why this tool stands out and how it improves a developer’s productivity.

Code Search

Search: While writing code, a developer often needs to look up the definition of a method. Most of the time that definition exists in the IDE (on the laptop), and the search becomes a matter of remembering the method’s name and reaching it with the IDE’s search functionality. It becomes trickier if one does not remember the exact phrase or does not know what to look for. It is harder still if that snippet of code is not available in the local dev environment: one has to clone multiple repos to navigate to the definition, find references and complete the review. This is where I see Sourcegraph adding value. Sourcegraph offers regex search (which can be restricted to a subset of languages like Python, Go or Java), symbol search (which searches only variable and function names) and Comby search (more powerful than regex; it can match balanced parentheses). With these, the developer is empowered to perform the following actions (among others), right in the browser:

- How an API should be invoked

- What is the impact of modifying an existing API

- Find variables starting with a specific prefix

- Find a function call and replace the argument (via comby search)

- Refactoring a monolith into microservices

- Learning new ways to write code from both internal private repos and open source repos

- Learning enterprise standard ways to read tokens securely, pack PoP tokens in microservices and client side code

- Search through 1000s of private repos with GBs of data, where it is not always possible/efficient to clone them locally

- Where are the environment configs declared

- Find specific toggles across the source

- How to implement a given algorithm

- Use GraphQL APIs (code-as-data) to power internal telemetry around source code metadata

- What recently changed in the code for a given feature, page or journey that broke it? One can search commit diffs and commit messages

- Use predefined searches curated for you or your team/organization. These can also be used to send you alerts/notifications when developers add or change calls to an API you own

- Search for instances of secret_key in the source

`repo:^github\.com/gruntwork-io/ file:.*\.tf$ \s*secret_key\s*=\s*".+"`

Sourcegraph enables all of the above by offering search that spans multiple code hosts (GitHub, GitLab, Bitbucket and many others), multiple repos on each host, all branches of a repo, and both open source repos and privately held enterprise repos. Note, however, that searching open source and private enterprise repos in the same single search is currently not possible; it takes two separate queries. Also, searching code is not the same as searching text. If you search for “http request” on GitHub, the results contain a lot of noise, including “httprequest” and “http_request”. All of these capabilities contribute to shipping code faster.
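To make the secret_key query concrete, here is a local Python sketch that applies the same three filters (repository regex, file-name regex and content regex) to a few in-memory files. It only mimics the filtering semantics for illustration and does not call Sourcegraph. The repository names and file contents are hypothetical.

```python
import re

# The three filters from the secret_key query, expressed as Python regexes.
REPO_FILTER = re.compile(r"^github\.com/gruntwork-io/")
FILE_FILTER = re.compile(r".*\.tf$")
CONTENT_PATTERN = re.compile(r'\s*secret_key\s*=\s*".+"')

# Hypothetical in-memory "code host": (repo, path) -> file contents.
SAMPLE_FILES = {
    ("github.com/gruntwork-io/demo", "main.tf"): 'provider "aws" {\n  secret_key = "not-a-real-key"\n}\n',
    ("github.com/gruntwork-io/demo", "variables.tf"): 'variable "secret_key" {}\n',
    ("github.com/gruntwork-io/demo", "README.md"): 'secret_key = "skipped: not a .tf file"\n',
    ("github.com/other-org/demo", "main.tf"): 'secret_key = "skipped: repo filter"\n',
}


def search(files: dict[tuple[str, str], str]) -> list[tuple[str, str, int, str]]:
    """Return (repo, path, line_number, line) for every matching line."""
    hits = []
    for (repo, path), text in files.items():
        # Apply the cheap repo and file filters before scanning contents.
        if not REPO_FILTER.search(repo) or not FILE_FILTER.search(path):
            continue
        for line_number, line in enumerate(text.splitlines(), start=1):
            if CONTENT_PATTERN.search(line):
                hits.append((repo, path, line_number, line.strip()))
    return hits


if __name__ == "__main__":
    for hit in search(SAMPLE_FILES):
        print(hit)
```

Only the assignment in `main.tf` under the matching organization is reported. The variable declaration, the non-Terraform file and the other repository are filtered out, which is the precision a text search for “secret_key” would lack.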

Code Review

Review: With advances in source code hosting tools like GitHub and GitLab, code reviews have become less geeky. The reviewer receives a notification of the review and opens it in a browser that renders the diff. They comment, approve or deny the request and move forward with the workflow, all inside the browser. Everyone realizes the importance of this step, which offers the opportunity to catch non-mechanistic patterns and avoid costly mistakes (logical/semantic errors, assumptions, etc.), and which is also a medium of knowledge transfer. Sourcegraph goes a couple of steps further and empowers the reviewer with source code navigation right from the browser, where you can extend and decorate code views using Sourcegraph extensions:

- Navigate source code as if in an IDE. Hover over a method name to “Go to Definition”. This is a huge time saver and makes the process efficient (thanks to Sourcegraph’s indexing).

- In a similar vein, in addition to seeing the definition of a method, the reviewer might want to check who else is using or referencing it in order to assess the impact of the change. Sourcegraph offers “Find References” across all repos that are indexed on the server.

- Note: Sourcegraph is self-hosted (tm/sourcegrapheval) in order to index all private enterprise repos, so the code never leaves the network. For searching non-enterprise open source code, however, there is a publicly available cloud service at sourcegraph.com. I would have loved an option where the private server falls back on the public server seamlessly; maybe the Sourcegraph team will make that available in future releases.

- Corroborate the change with test coverage numbers and runtime traces. The two features above (“Go to Definition” and “Find References”) cover 80% of the use cases. Still, the reviewer can feel more confident about a change when the review also shows test coverage numbers. Sourcegraph offers that and goes one step further by surfacing runtime trace performance numbers (via supported services that have to be enabled).

Workflow Automation

Automation: So far I have discussed two pillars, Search and Review. Now think about performing those acts at enterprise scale, with appropriate roles and visibility, via workflows. Sourcegraph offers a beta feature called Automation that does exactly that: remove legacy code, fix critical security issues, and pay down tech debt. It can create campaigns across thousands of repositories and code owners. Sourcegraph automatically creates and updates all of the branches and pull requests, and you can track progress and activity in one place. This is huge. Imagine that scale! This capability enables the following use cases:

- Remove deprecated code: Monitor for legacy libraries, and coordinate upgrading all the affected repos iteratively

- Triage critical issues

- Dependency updates

- Enable distributed adoption

- Reduce the cost and complexity of sunsetting legacy applications

Essentially, its search offers the sum total of enterprise-wide code intelligence in a split second, upping the ante for developer experience. Here is a quick feature comparison with other similar solutions: https://about.sourcegraph.com/workflow/#summary-feature-comparison-chart

Proof of Concept

Installation was super simple: the distribution is available as a Docker container, which I deployed on one of our EC2 instances. I was also able to integrate quickly and seamlessly with Okta (our AuthN service). Colleagues from across the enterprise were then able to play around and try some use cases. Once adoption improves, I plan to deploy Sourcegraph on a Kubernetes cluster.

While integrating with Okta, I noticed a small issue with the SAML handshake. When I mentioned it to the Sourcegraph team, they hopped on a call, helped debug it and made a quick change in the product. They then provided a release candidate with the hotfix, which I was able to upgrade to in a matter of minutes without losing any of the configuration done so far. Loved the experience!

What's Next

A developer platform is the one place where Developers and DevOps teams go to answer questions about code and systems. It ties together information from many tools, from repositories on your code host to dependency relationships among your projects and application runtime information.

-Sourcegraph

I am encouraged to see how it fits into T-Mobile’s strategy of creating an environment that facilitates rapid experimentation and enables faster change. The goal is to empower developers and DevOps to build better software faster, safer and healthier.

In that spirit, T-Mobile has made crucial moves in the last few years toward optimizing its Continuous Delivery Platform. It moved from custom CI/CD processes on prem, to industry-standard processes on hosted solutions on prem, to cloud-based GitLab: a one-stop shop for source code management and the DevOps lifecycle, along with DevSecOps. In Nov 2019, Sourcegraph and GitLab announced (relevant MR) a native integration offering a big improvement to the developer UX. The Sourcegraph browser extension will continue to work. The integration with GitLab simply means that developers unwilling to install browser extensions can enjoy valuable features seamlessly integrated into their GitLab workflow.

Note: Private enterprise repositories still have to be served by a private, self-hosted Sourcegraph server. The difference is that the experience is now delivered natively via GitLab workflows rather than via browser plugins.

Given my positive experience and the immense potential, I plan to recommend Sourcegraph to our procurement team, in the hope of making it a reality for all Dev, DevOps and DevSecOps teams at T-Mobile.

Blog! Why bother?

It’s been on my mind for a few months now to revive my interest in blogging and up the ante. Given the hiatus, choosing a topic proved harder than I first thought. A few candidate topics came to mind, but my train of thought wandered through multiple paths, viewpoints and perspectives, all of which made me question, “Why am I doing this?”

I wanted to take a step back and reflect on why I should be blogging. I also wondered whether, after all the time and effort, it would have the impact I assumed it would. Times have changed and information consumption patterns have evolved, so is traditional blogging still an authentic distribution channel? What do I want to achieve? A few answers came to mind:

- Over the years, I have acquired skills through my own experiences, and I’d like them to find expression.

- I’d like to argue a viewpoint and/or debate the pros and cons of multiple perspectives on a given story.

- I want to reinforce my own knowledge on topics, and I figured that authentic writing is one way to showcase expertise and, in the process, build my brand.

- I want to build a community of like-minded people, kindred spirits with a lot in common, to debate with and bounce ideas off.

- I want to keep the experience educational and fun, with my articles serving as a measure of my progress and concrete enough to help others on a similar journey.

- I’d like to publish articles on topics like Digital Transformation and Programming, to start with.

When it comes to delivering an idea, I still believe a written blog is a good medium (compared with audio and video) because text is searchable, needs less maintenance and carries less noise. However, there is a plethora of platforms like Medium, WordPress, LinkedIn, dev.to and many others related to one’s niche skill set. I am not entirely clear on how to manage this, other than publishing the same content on all relevant platforms.

It also feels unwise to ignore platforms like YouTube, Twitch, SoundCloud and podcasts, so I will experiment with them depending on the content. Experimentation is key, and I should find the right balance in due course.

So what’s next? I want to pick one topic and blog it through to the end. Narrowing down to one does not come easily to me, given my “generalist” nature (a blog topic by itself for another day). It may be tactful and practical to identify parts of my daily work and blog about my journey in accomplishing them. So stay tuned!

Startup Weekend Seattle Space

Our team “Star Trial” won 2nd prize for #pitching and creating a #businessplan for matching #aerospace companies with testing facilities that offer services to certify the function of a gadget in #space. We built the team with members interested in a similar cause and had great fun executing it. More importantly, we learned a lot. Great guidance came from #johnsechrest. The event was wonderfully organized by Michael Doyle, Sean McClinton and Stan Shull, and graced by industry stalwarts like #DonWeidner #lisarich #russellhannigan #ronfaith and #aaronbird. Thanks to our #mentors @vladimir.baranov.newyork @joseph.gruber

One of the prizes is the “Voyager Golden Recordset” from Lockheed Martin

Startup Weekend Seattle: Space Edition

Startup Weekend

Startup Weekend Seattle

#SUWSEASPACE #SUWSeattle

#space #newspace #hackathon #businessplan

To Think or Not to Think!

To “think” or not to “think”. Wait a sec, that’s a thought too! 🙂 … Just be!